A data pipeline refers to the steps required to move data from a source system to a destination system. These steps include copying data, transferring it from an on-site location to the cloud, and combining it with other data sources. The primary goal of a data pipeline is to ensure that every one of these steps is applied consistently to all of the data.

When managed well with data pipeline tools, a data pipeline gives businesses access to consistent, well-structured data sets for analysis. By systematizing data transfer and transformation, data engineers can consolidate information from numerous sources and put it to use for business purposes. For example, AWS Data Pipeline lets users move data between AWS compute and storage services and on-premises data sources.

Data pipelines help teams obtain accurate insights from their data. They are most useful for organizations that keep multiple data sources in silos, need real-time analytics, or store their data in the cloud. For example, the data delivered by pipeline tools can feed predictive analytics that anticipate future trends. A production department can use such analytics to estimate when raw material is likely to run out, or to forecast which vendor might cause delays. Insights like these help the department optimize its operations.

Although ETL and data pipelines are related, they are not the same thing, even though people often use the two terms interchangeably. Both move data from one system to another; the key difference lies in how they are applied.

An ETL pipeline is a series of processes that extract data from a source, transform it, and load it into an output destination. A data pipeline, on the other hand, is a broader term that includes the ETL pipeline as a subset. It covers any set of processing tools that transfer data from one system to another, and the data might or might not be transformed along the way.

More precisely, the purpose of a data pipeline is to transfer data from sources such as business processes, event tracking systems, and data banks to a data warehouse for business intelligence and analytics. In an ETL pipeline specifically, data is extracted, transformed, and loaded into a target system. The sequence is critical: after extracting the source data, you need to fit it into a data model that is designed around your business intelligence requirements. This is done by first accumulating and cleaning the data, and then transforming it. Finally, the resulting data is loaded into your data warehouse.
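
The extract, transform, load sequence described above can be sketched with the Python standard library alone. This is a minimal illustration rather than a production design: the CSV content, the `orders` table, and the function names are all hypothetical, and an in-memory SQLite database stands in for a real data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical source data; a real pipeline would read from a file, API, or database.
RAW_CSV = """order_id,amount,country
1, 19.99 ,us
2,,de
3,5.00,US
"""


def extract(text):
    """Extract: read the raw rows from the CSV source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop rows without an amount, then normalize types and casing."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # cleaning step: skip incomplete records
        cleaned.append(
            (int(row["order_id"]), float(amount), row["country"].strip().upper())
        )
    return cleaned


def load(rows, conn):
    """Load: write the transformed rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER, amount REAL, country TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse
load(transform(extract(RAW_CSV)), conn)

result = conn.execute(
    "SELECT order_id, amount, country FROM orders ORDER BY order_id"
).fetchall()
print(result)
```

Keeping the three stages as separate functions mirrors the fixed order of the process: the row with the missing amount is removed during transformation, so it never reaches the target table.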

An ETL pipeline typically works in batches, meaning that a large chunk of data is moved to the target system at one time. For example, the pipeline can run two or three times daily, or once every set number of hours (six, ten, or twelve, for instance). You can also schedule batches to run at a specific time every day when there is little system traffic. A data pipeline, by contrast, can also run as a real-time process, handling each event as it occurs rather than in batches. In that case the data is handled as a continuous stream, which suits data that requires continuous updating, such as readings from a sensor that tracks traffic.
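
The difference between the two modes can be shown with a small sketch. Everything here is an assumption made for illustration: the traffic sensor readings, the `process` handler, and the function names are hypothetical, and a plain Python iterator stands in for a live event source.

```python
def process(event):
    """Hypothetical per-record handler: convert a speed reading from km/h to m/s."""
    return {"sensor": event["sensor"], "speed_ms": round(event["speed_kmh"] / 3.6, 2)}


def run_batch(events, batch_size):
    """Batch mode: records are accumulated and handed over one chunk at a time."""
    for start in range(0, len(events), batch_size):
        chunk = events[start:start + batch_size]
        yield [process(event) for event in chunk]


def run_stream(event_source):
    """Streaming mode: each event is handled as soon as it arrives."""
    for event in event_source:
        yield process(event)


# Hypothetical traffic sensor readings.
readings = [
    {"sensor": "A", "speed_kmh": 36.0},
    {"sensor": "B", "speed_kmh": 72.0},
    {"sensor": "A", "speed_kmh": 54.0},
]

batches = list(run_batch(readings, batch_size=2))
streamed = list(run_stream(iter(readings)))

print(len(batches), "batches;", len(streamed), "streamed events")
```

Both modes apply the same handler. What changes is when results become available: the batch runner emits nothing until a whole chunk is ready, while the streaming runner emits one result per incoming event.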

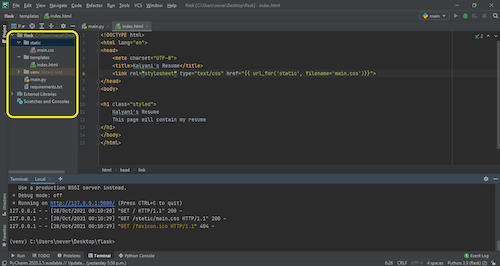

Creating pipelines with Python and Scikit-Learn

The workflow of a machine learning project covers all the steps needed to build it. A typical ML project consists of four main parts:

- Data collection: this process depends on the project; the data can arrive in real time or be collected from various sources such as files, databases, and surveys.

- Data preprocessing: collected data generally contains many missing values, extreme outliers, and disorganized text that cannot be fed to the model directly; the data therefore needs to be preprocessed first.

- Model training and testing: once the data has been preprocessed, it can be fed to a machine learning model. Before that, it is important to decide which model to use, ideally one that is likely to perform well. The dataset is divided into three sections: the training, validation, and test sets. The main goal is to train the model on the training set, tune its parameters using the validation set, and then measure its performance on the test set.

- Evaluation: this is part of the model development process. It helps to find the model that best represents the data and to estimate how well the chosen model will perform on future data. It is done after training models with different algorithms. The main aim is to compare the evaluation results and choose the best model accordingly.
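
The training, validation, and test split described in the list above can be sketched with scikit-learn as follows. The choices here are assumptions made for illustration only: the built-in iris dataset, a 60/20/20 split, logistic regression as the model, and a small hand-picked set of candidate values for the regularization parameter `C`.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)

# First set aside 20% of the data as the held-out test set.
X_rest, X_test, y_rest, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0, stratify=y
)
# Then split the remainder 75/25, giving 60% training and 20% validation overall.
X_train, X_val, y_train, y_val = train_test_split(
    X_rest, y_rest, test_size=0.25, random_state=0, stratify=y_rest
)

# Tune one parameter on the validation set only.
best_C, best_score = None, -1.0
for C in (0.01, 0.1, 1.0, 10.0):
    model = LogisticRegression(C=C, max_iter=1000).fit(X_train, y_train)
    score = model.score(X_val, y_val)
    if score > best_score:
        best_C, best_score = C, score

# Measure final performance once, on data the model has never seen.
final_model = LogisticRegression(C=best_C, max_iter=1000).fit(X_train, y_train)
test_accuracy = final_model.score(X_test, y_test)
print(f"best C: {best_C}, test accuracy: {test_accuracy:.2f}")
```

The test set is touched only once, at the very end, so the reported accuracy is not biased by the parameter search.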

ML workflow in Python

Executing the workflow is similar to running a pipeline: the output of each step becomes the input of the next. Scikit-learn is a powerful tool for machine learning, and its sklearn.pipeline module provides a class called Pipeline to handle such pipelines.
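
As a minimal sketch of how Pipeline chains steps, the example below scales the features and then fits a classifier. The dataset (iris), the step names `"scaler"` and `"classifier"`, and the choice of `StandardScaler` with `LogisticRegression` are assumptions picked for illustration; any compatible transformers and final estimator could take their place.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Each step is a (name, estimator) pair; the output of one step feeds the next.
pipe = Pipeline(steps=[
    ("scaler", StandardScaler()),                       # preprocessing step
    ("classifier", LogisticRegression(max_iter=1000)),  # final estimator
])

# fit() fits the scaler on the training data, transforms it, then fits the classifier.
pipe.fit(X_train, y_train)

# score() applies the already-fitted scaler to the test data before predicting.
accuracy = pipe.score(X_test, y_test)
print(f"test accuracy: {accuracy:.2f}")
```

Because the scaler is fitted inside the pipeline, its statistics come from the training data only, which avoids leaking information from the test set into preprocessing.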